Leaping over the language barrier with machine translation in Levantine Arabic

When a language you don’t understand appears in your Facebook news feed, you can click a button and translate it. This kind of language technology offers a way of communicating not just with the millions of people who speak your language, but with millions of others who speak something else.

Or at least it almost does.

Like so many other online machine translation systems, it comes with a caveat: it is only available in major languages.

TWB is working to eliminate that rather significant caveat through our language technology initiative, Gamayun. We named it after a mythical birdwoman figure in Slavic folklore, a magical creature who imparts words of wisdom to the few who can understand her. We think she’s a perfect advocate for language technology to increase digital equality and improve two-way communication in marginalized languages.

We have reached an important Gamayun milestone by leaping over the language barrier with a machine translation engine in Levantine Arabic. Here is how we got here, what we learned, and what is next.

What is behind developing a machine translation engine in Levantine Arabic?

In November 2019, we joined forces with a group of innovators and language engineers from PNGK and Prompsit to address WFP’s Humanitarian Action Challenge. Our goal was to use machine translation to enhance the way aid organizations understand the needs and concerns of Syrian refugees, to improve food security programming.

So we developed a text-to-text machine translation (MT) engine for Levantine Arabic tailored to the specifics of refugees’ experiences. To achieve this, we collaborated with Mercy Corps’ Khabrona.Info team. The team runs a Facebook page that provides Syrian refugees with reliable information and answers on topics such as accessing food and other support. We took content shared on the Khabrona.Info Facebook page and manually translated it into English to adapt the engine. The training data and a demonstration version of our MT are available on our Gamayun portal.

How well does this machine translation engine perform?

To answer this question, we conducted an evaluation based on tests widely used by MT researchers. We found that our MT engine produced better translations for Levantine Arabic than one of the most widely used online machine translation systems.

We first asked experienced translators to rate the translations for both accuracy and fluency. We provided them with ten randomly selected source texts and translations generated by humans, Google’s MT, and our MT. On a scale from zero (no errors) to three (critical errors), all translations scored fairly well. Our MT engine performed slightly better than Google’s MT because it was adapted to the specifics of Levantine Arabic and its online colloquialisms about food security and other topics relevant to refugees’ experiences. The human translations performed slightly better than our MT, but were not perfect.

We also asked the experienced translators to rank the best, second best, and worst translations based on each source text. While the human translations were consistently ranked higher than both machine translation engines, our MT was preferred 70% of the time over Google’s MT.

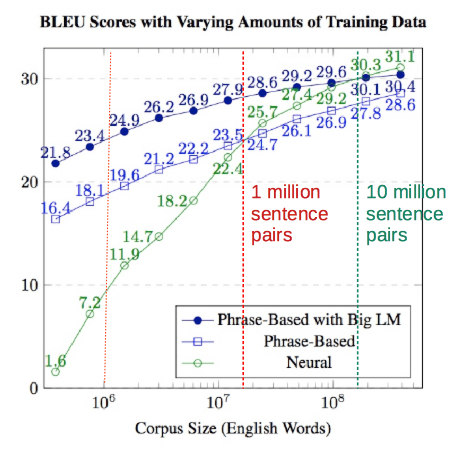
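As a rough illustration of how a pairwise preference figure like the one above can be derived from rankings, here is a minimal sketch. The function name `preference_rate`, the system labels, and the toy rankings are all invented for illustration; they are not the evaluation data or tooling used in this project.

```python
def preference_rate(rankings, system_a, system_b):
    """Share of source texts on which system_a is ranked above system_b.

    rankings: list of dicts mapping a system label to its rank (1 = best).
    """
    wins = sum(1 for r in rankings if r[system_a] < r[system_b])
    return wins / len(rankings)


# Invented rankings for four source texts (illustration only, not real results).
toy_rankings = [
    {"human": 1, "custom_mt": 2, "generic_mt": 3},
    {"human": 1, "custom_mt": 3, "generic_mt": 2},
    {"human": 2, "custom_mt": 1, "generic_mt": 3},
    {"human": 1, "custom_mt": 2, "generic_mt": 3},
]

rate = preference_rate(toy_rankings, "custom_mt", "generic_mt")
print(f"custom_mt preferred over generic_mt on {rate:.0%} of texts")
```

With only ten source texts, as in the evaluation described here, each text shifts the rate by ten percentage points, so a figure like this is best read as indicative rather than precise.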

We then used BLEU (bilingual evaluation understudy), the standard metric for automated MT quality testing. BLEU scores a machine translation according to how well it matches a reference human translation. Scores range from zero for no match to 1.0 for a perfect match, but few translations score 1.0 because different translators produce slightly different texts. Our generic MT engine, trained on publicly available parallel English-Arabic text, obtained a score of 0.195 on a test set of 200 social media posts. After further training on a small but specific dataset covering Levantine Arabic and its online colloquialisms, it reached 0.248. By comparison, Google’s MT scored 0.212 on the same test set.
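For readers curious about what a BLEU score measures, the sketch below computes a simplified corpus-level BLEU on the 0 to 1.0 scale: clipped n-gram precision for 1- to 4-grams, combined by geometric mean and multiplied by a brevity penalty. It assumes whitespace tokenization, a single reference per sentence, and no smoothing. The function names and toy sentences are invented for illustration, and this is not necessarily the tool or configuration used in our evaluation; established implementations such as sacreBLEU handle tokenization and edge cases more carefully.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all n-grams of length n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses, references, max_n=4):
    """Simplified corpus-level BLEU (one reference per hypothesis, no smoothing).

    hypotheses, references: parallel lists of token lists.
    """
    matches = [0] * max_n
    totals = [0] * max_n
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        hyp_len += len(hyp)
        ref_len += len(ref)
        for n in range(1, max_n + 1):
            hyp_counts = ngrams(hyp, n)
            ref_counts = ngrams(ref, n)
            # Clip each n-gram's count to the number of times it occurs in the reference.
            matches[n - 1] += sum(min(c, ref_counts[g]) for g, c in hyp_counts.items())
            totals[n - 1] += sum(hyp_counts.values())
    # Without smoothing, a single n-gram order with no matches gives a score of zero.
    if hyp_len == 0 or min(matches) == 0:
        return 0.0
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: penalize output that is shorter than the reference.
    brevity_penalty = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return brevity_penalty * math.exp(log_precision)


# Invented toy sentences (illustration only).
reference = ["the cat sat on the mat".split()]
exact = ["the cat sat on the mat".split()]
close = ["the cat sat on the mat today".split()]

exact_score = corpus_bleu(exact, reference)
close_score = corpus_bleu(close, reference)
print(exact_score, close_score)
```

Because the score depends on exact word overlap with one particular reference, a perfectly acceptable translation that uses different wording can score low, which is one reason to pair BLEU with human evaluation as described above.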

Take the short sentence أسعار المواد الغائية مرتفعة as an example: humans translated it as “food is expensive” and our MT returned “food prices are high”; Google’s MT, by contrast, translated it as “the prices of the materials are high.” All are grammatically correct results, but our MT tended to pick up the nuances of informal speech better than Google’s MT. This may seem trivial, but it is critical if MT is used to quickly understand requests for help as they come up, or to keep an eye on people’s concerns and complaints in order to adjust programming.

What makes these results possible?

We specifically designed our MT engine to provide reliable and accurate translations of unstructured data, such as the language used in social media posts. We involved linguists and domain experts in collating and editing the dataset to train the engine. This ensured a focus on both humanitarian domain language and colloquialisms in Levantine Arabic.

The agility of this approach means the engine can be used for various purposes, from conducting needs assessments to analyzing feedback information. The approach also meets the responsible data management requirements of the humanitarian sector.

What have we learned?

We have demonstrated that it is possible to build a translation engine of reasonable quality for a marginalized language like Levantine Arabic and to do so with a relatively small dataset. Our approach entailed engaging with the native language community and focusing on text scraped from social media. This holds great potential for building language technology tools that can spring into action in times of crisis and be adapted to any particular domain.

We also learned that even human translations for Levantine Arabic are not perfect. This shows the importance of building networks of translators for marginalized languages who can help build up and maintain language technology. Where there are not enough—if any—professional translators, a key first step is training bilingual people with the right skills and providing them with guidance on humanitarian response terminology. This type of capacity building can not only make technology work for marginalized language speakers in the longer term, but also ensure they have access to critical information in their languages in the shorter term.

What’s next?

We are refining our approach, augmented by external support, to achieve the full potential of language technology. We are currently working with the Harvard Humanitarian Initiative and IMPACT Initiatives, using natural language processing and machine learning to transcribe, translate, and analyze large sets of qualitative responses in multilingual data collection efforts to inform humanitarian decision making. We have also joined the Translation Initiative for COVID-19 (TICO-19), alongside researchers at Carnegie Mellon and major tech companies including Amazon, Facebook, Google, and Microsoft, to develop and train state-of-the-art machine translation models in 37 different languages on COVID-19.

Stay tuned to learn how we move forward with these projects. We’ll continue to develop language technology solutions to enhance two-way communication in humanitarian crises and amplify the voices of millions of marginalized language speakers.

Written by Mia Marzotto, Senior Advocacy Officer for Translators without Borders.